Build event-driven data quality pipelines with AWS Glue DataBrew

As businesses collect ever more data to drive core processes such as decision making, reporting and machine learning (ML), they keep running into the same hurdle: making sure that data is fit for use, with no missing, malformed or incorrect values. For many companies this is a top priority, and that’s where AWS Glue DataBrew steps in!

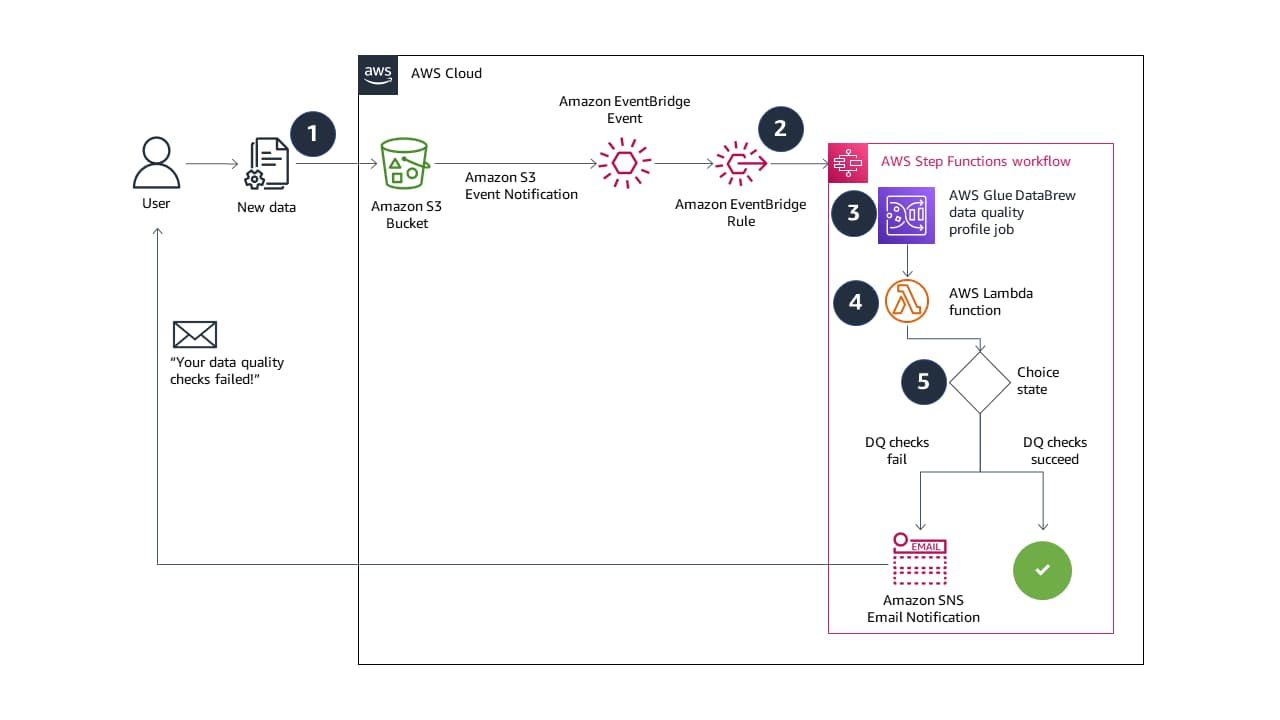

Let’s build a fully automated, end-to-end event-driven pipeline for data quality validation.

Now that’s a subtitle that gets us excited! As with any data journey, the road to structured, intelligent and readily queryable data can be a rocky one.

AWS Glue DataBrew is a visual data preparation tool that makes it easy to find data quality statistics such as duplicate values, missing values and outliers in your data. You can also set up data quality rules in DataBrew to perform conditional checks based on unique business needs.
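To make the idea of a data quality rule concrete, here is a minimal sketch of a request for DataBrew’s CreateRuleset API, shaped as keyword arguments for a boto3 `databrew` client. The ruleset name, dataset ARN, `order_total` column and 95% threshold are hypothetical, and the field layout follows our reading of the API reference, so check it against the current documentation before relying on it.

```python
from typing import Any, Dict


def build_ruleset_request(name: str, dataset_arn: str) -> Dict[str, Any]:
    """Build keyword arguments for the DataBrew CreateRuleset API.

    The column name and threshold below are hypothetical placeholders.
    """
    return {
        "Name": name,
        "TargetArn": dataset_arn,  # ARN of the DataBrew dataset to validate
        "Rules": [
            {
                # Conditional business rule: order totals must be positive.
                "Name": "positive-order-total",
                "CheckExpression": ":col1 > :val1",
                "SubstitutionMap": {
                    ":col1": "`order_total`",  # column names are wrapped in backticks
                    ":val1": "0",              # plain values are not
                },
                # Assumed semantics: the rule passes when at least 95% of
                # rows satisfy the check expression.
                "Threshold": {
                    "Value": 95.0,
                    "Type": "GREATER_THAN_OR_EQUAL",
                    "Unit": "PERCENTAGE",
                },
            },
        ],
    }


def create_ruleset(client: Any, request: Dict[str, Any]) -> str:
    """Send the request via a DataBrew client and return the ruleset name."""
    response = client.create_ruleset(**request)
    return response["Name"]
```

To send it for real you would pass `boto3.client("databrew")` as `client`. Keeping the payload builder separate from the API call means the rules themselves can be unit-tested without AWS credentials; once the ruleset is attached to a profile job, DataBrew checks each job run against it.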

Metin Alisho, Data Scientist at Firemind, says: “Event driven pipelines make up the ‘bread and butter’ of many of our customer solutions. This AWS Glue DataBrew driven solution makes finding quality data between the outliers and duplicate values simple.”
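The event-driven half of such a pipeline can be sketched as follows. We assume DataBrew publishes a `DataBrew Ruleset Validation Result` event to Amazon EventBridge with a `validationState` field in its `detail`; verify the exact event shape against the DataBrew EventBridge documentation. The `matches` helper is a deliberately simplified stand-in for EventBridge’s own pattern matching (exact values and nested objects only), and `handle` with its `quarantine`/`promote` outcomes is hypothetical.

```python
from typing import Any, Dict

# Assumed EventBridge pattern for failed DataBrew ruleset validations.
FAILED_VALIDATION_PATTERN: Dict[str, Any] = {
    "source": ["aws.databrew"],
    "detail-type": ["DataBrew Ruleset Validation Result"],
    "detail": {"validationState": ["FAILED"]},
}


def matches(pattern: Dict[str, Any], event: Dict[str, Any]) -> bool:
    """Simplified matching: every pattern key must be present in the event,
    nested dicts are matched recursively, and leaf values must appear in
    the pattern's list of allowed values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


def handle(event: Dict[str, Any]) -> str:
    """Hypothetical Lambda-style handler that routes a validation result."""
    if matches(FAILED_VALIDATION_PATTERN, event):
        return "quarantine"  # e.g. alert the data owner and hold the data back
    return "promote"  # anything else continues downstream
```

In a deployed pipeline, EventBridge itself would evaluate the pattern and invoke a target such as an AWS Lambda function or an Amazon SNS topic; the local matcher only makes the routing logic easy to test.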

Transforming your data, one column at a time!

Solutions built with tools such as AWS Glue DataBrew are just the beginning of what’s possible for data management and interpretation. Get in touch with us today to explore how we can work with your data.
