Exploring the challenges of DevOps

Solutions Architect Darren O’Flaherty explores real-world examples of DevOps integration, as well as architectures that enable enhanced automation.

Working in a fast-paced DevOps environment has its challenges, and I can testify to this: I recently joined an organisation where DevOps practices are a central pillar of every new client delivery.

If you work in DevOps, you will agree that it moves at speed, that changes are frequent, and that the key to success is the ability to adapt. Over the past decade we have seen DevOps emerge and become widely adopted, and this has in turn spawned SecOps, GitOps, CloudOps, ITOps and DevSecOps (with many more variations to come).

For this post, however, we’ll be looking at DevOps in particular.

There are many benefits to adopting DevOps practices for any business. Many view DevOps as a way to increase their speed to market, getting new products out the door quickly without sacrificing quality.

The customers I work with, whether start-ups or large organisations, benefit from this expertise. By removing some of the inefficiency and effort of complex workflows, the goal is to reduce the overall cost of developing new products and services across the DevOps lifecycle.

A lot of the clients I’m working with are keen to modernise their products. With the right guidance and expertise, I’m taking them on that journey by applying solutions underpinned by sound and secure DevOps practices.

Before immersing customers in DevOps culture, I take them through an assessment of their current delivery environment to see whether they are ready to adapt. This helps establish whether the team, toolsets, architectures and culture are adequately prepared to move their applications to the next stage.

A lot of businesses are ready, and this is particularly true of start-ups. Their apps tend to be stateless, have been developed with new application frameworks, and can be architected as microservices. Sometimes, however, the teams are too busy, or are simply lacking that next recruit with the enterprise-class expertise required to build on AWS. That is where Firemind, as an Advanced AWS Consulting Partner, steps in.

I often find myself speaking to customers who have an application they want to move away from a typical 3-tier stack of ALB, EC2 and database. They usually want to move to microservices, with a solid pipeline for fast, iterative, secure deployments into a production-level environment.

I see a familiar trend, with lots of businesses moving from this typical stack, and even from legacy architectures, to microservices (always on managed services wherever possible). Teams now want IaC, microservices and CI/CD pipelines in order to modernise, but also to let AWS do the heavy lifting while their staff focus on the product.

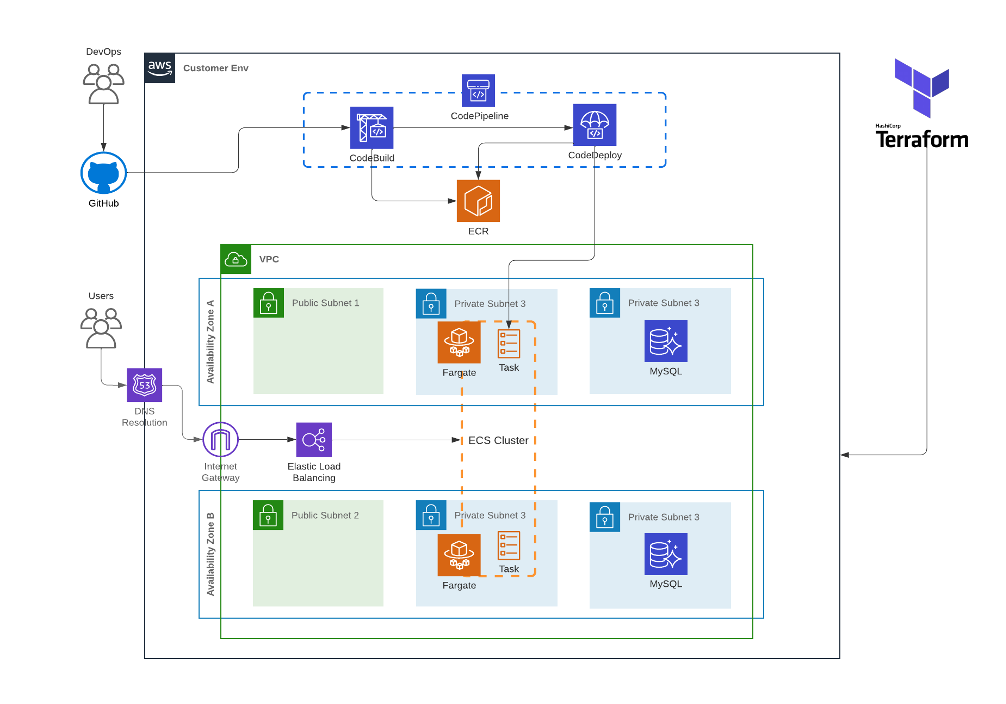

Below is an illustration that highlights what I’m typically building for more and more customers, using Terraform.

The architecture

A typical architecture consists of a VPC with two public, two private and two super-private subnets, spread across different Availability Zones. The desired task count in this illustration is two: each task is deployed with AWS Fargate on a separate private subnet, and both tasks belong to the same ECS service. The target database is Aurora MySQL, deployed in the super-private subnets and set to Multi-AZ for high availability.
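To make the subnet layout concrete, here is a minimal Python sketch that carves a VPC range into the six subnets described above. The CIDR range, the /24 subnet size and the Availability Zone names are assumptions for illustration only; in practice this layout would live in Terraform.

```python
import ipaddress


def plan_subnets(vpc_cidr, azs, tiers=("public", "private", "super-private"), new_prefix=24):
    """Assign one subnet per tier per Availability Zone from the VPC range."""
    network = ipaddress.ip_network(vpc_cidr)
    blocks = network.subnets(new_prefix=new_prefix)  # generator of consecutive subnets
    plan = []
    for tier in tiers:
        for az in azs:
            plan.append({"tier": tier, "az": az, "cidr": str(next(blocks))})
    return plan


# Hypothetical VPC range and AZ names, not taken from a real deployment.
layout = plan_subnets("10.0.0.0/16", ["eu-west-1a", "eu-west-1b"])
for subnet in layout:
    print(f'{subnet["tier"]:<14}{subnet["az"]:<12}{subnet["cidr"]}')
```

Allocating the ranges in tier order keeps each tier contiguous, which tends to make route tables and security rules easier to read.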

An Application Load Balancer balances the load between the two tasks. The pipeline detects changes to the image, which is stored in ECR, and uses CodeDeploy to deploy the new version to a Fargate cluster and route traffic to it.

CodeDeploy uses a listener to reroute traffic to the port of the updated container specified in the AppSpec file. The pipeline is also configured to use a source location, such as GitHub, where the ECS task definition is stored.
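The traffic switch can be pictured with a toy model. The sketch below is not the CodeDeploy API; the class and function names are hypothetical and it only illustrates the idea of standing up a replacement target group for the updated container and then repointing the listener at it.

```python
from dataclasses import dataclass


@dataclass
class TargetGroup:
    name: str
    task_definition: str
    container_port: int


@dataclass
class Listener:
    port: int
    forward_to: TargetGroup


def blue_green_deploy(listener, new_task_definition, container_port):
    """Repoint the listener at a replacement target group and return the old one."""
    current = listener.forward_to
    replacement_name = "green" if current.name == "blue" else "blue"
    replacement = TargetGroup(replacement_name, new_task_definition, container_port)
    listener.forward_to = replacement  # production traffic now reaches the new tasks
    return current  # kept so a rollback can point the listener back


# Hypothetical task definition names and ports.
listener = Listener(port=443, forward_to=TargetGroup("blue", "web:1", 80))
previous = blue_green_deploy(listener, "web:2", 8080)
print(listener.forward_to, previous)
```

Returning the previous target group mirrors why this style of deployment is attractive: the old version stays available until you are confident in the new one.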

The continuous delivery pipeline will automatically build and deploy container images whenever source code is changed, or a new base image is uploaded to ECR.

This architecture uses the following artefacts:

• A Docker image file that specifies the container name and repository URI of the ECR image repository.

• An ECS task definition that lists the Docker image name, container name, ECS service name, and load balancer configuration.

• A CodeDeploy AppSpec file that specifies the name of the ECS task definition file, the name of the updated application’s container, and the container port where CodeDeploy reroutes production traffic. It can also specify optional network configuration and Lambda functions you can run during deployment lifecycle event hooks.
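As a rough illustration of the last artefact, the sketch below builds an ECS AppSpec document in Python and prints it as JSON. The container name, port and Lambda function name are made up for the example, and `<TASK_DEFINITION>` is left as a placeholder on the assumption that the pipeline substitutes the real task definition at deploy time.

```python
import json


def build_appspec(task_definition, container_name, container_port, hooks=None):
    """Return an AppSpec structure for an ECS service deployment."""
    properties = {
        "TaskDefinition": task_definition,
        "LoadBalancerInfo": {
            "ContainerName": container_name,
            "ContainerPort": container_port,
        },
    }
    appspec = {
        "version": 0.0,
        "Resources": [
            {"TargetService": {"Type": "AWS::ECS::Service", "Properties": properties}}
        ],
    }
    if hooks:
        # Optional: map lifecycle events to Lambda functions.
        appspec["Hooks"] = [{event: function} for event, function in hooks.items()]
    return appspec


# "web", 8080 and "validate-green" are hypothetical values for illustration.
appspec = build_appspec(
    "<TASK_DEFINITION>",
    "web",
    8080,
    hooks={"AfterAllowTestTraffic": "validate-green"},
)
appspec_json = json.dumps(appspec, indent=2)
print(appspec_json)
```

Generating the file from code is optional; many teams simply keep a hand-written AppSpec file alongside the task definition in the source repository.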

This diagram is for illustration purposes only, to give an overview of what I’m building more and more for my clients. In a real-world deployment, however, I would have multiple accounts for the different stages of the product lifecycle (Dev, Test, Stage, Prod), utilising a host of other services for operational excellence, security, reliability, performance efficiency, cost optimisation and sustainability.

What I’m seeing is that my clients want to move to microservices built on a range of managed services, all working together to deliver their ultimate goal: automation through DevOps practices.
