Optimise Your AI Systems with Managed AI Services

- Ensure smooth performance and scalability with our fully managed AI services, tailored to your needs.

Find our managed service offering on AWS Marketplace


Managed AI

Managed AI allows you to improve AI performance, reduce downtime, and scale with confidence.

Our Managed AI services keep your AI systems operating at their best, delivering reliable performance and seamless scalability. We take the burden of managing AI workloads off your shoulders, overseeing everything from data pipelines to cloud services. With proactive monitoring, continuous optimisation, and expert support, we help you get the most from your AI investments by minimising downtime, improving efficiency, and freeing you to focus on strategic growth. Whether you’re scaling your AI infrastructure or fine-tuning existing systems, our tailored services keep your technology aligned with your business goals.

What is Managed AI?

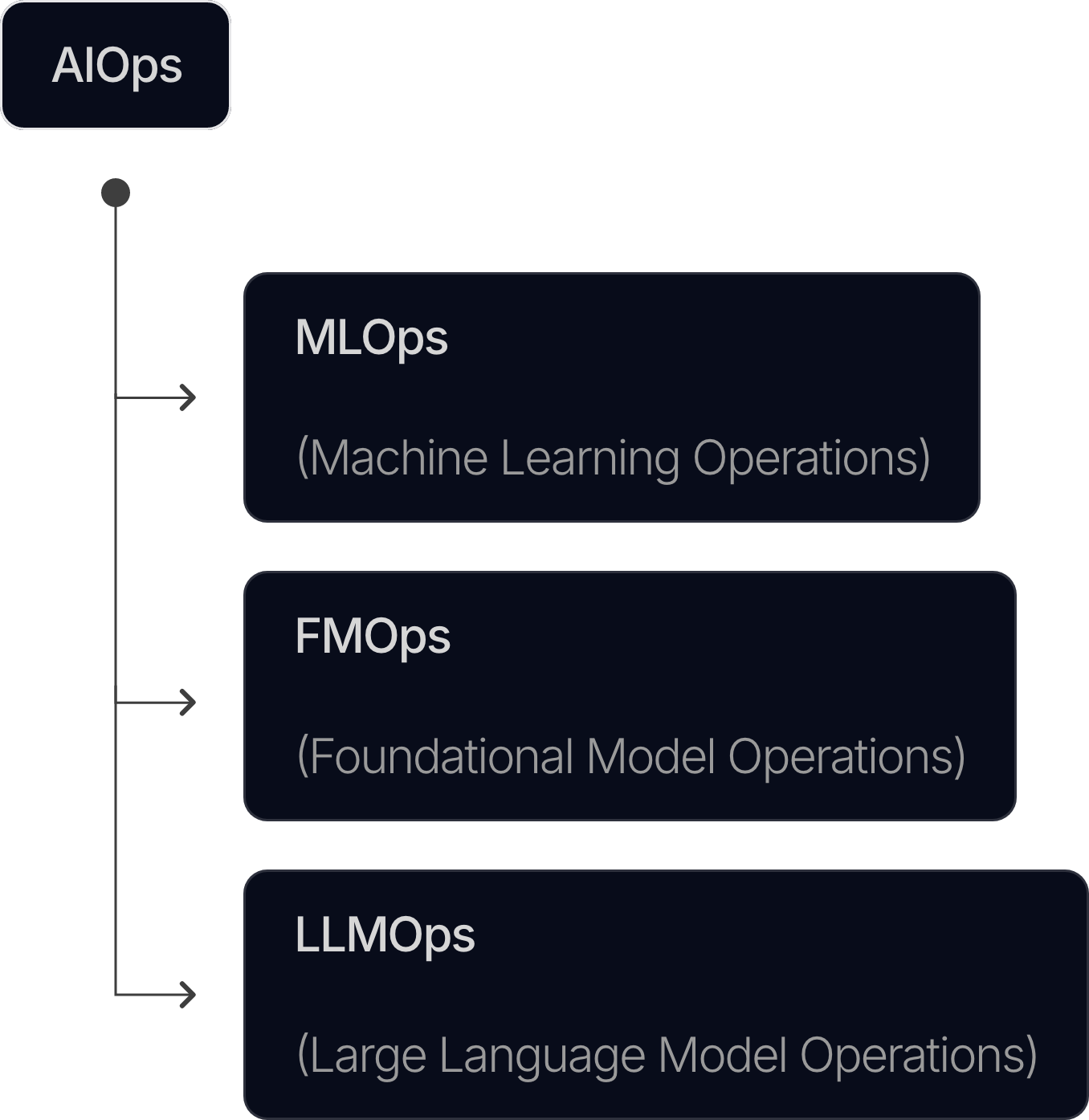

Comprehensive management of AI, ML and Gen AI workloads

Managed AI goes beyond traditional MLOps, FMOps and LLMOps, delivering a robust, end-to-end approach for overseeing data pipelines, AI applications, AI models, and cloud services.

Features

Why Managed AI matters

Ensure scalability, performance, and reliability in an evolving AI landscape.

1. Business Value Assurance

We ensure your AI systems deliver consistent business value by monitoring metrics such as accuracy and proactively addressing potential issues.

2. Quarterly Reviews

Our service includes regular quarterly reviews to assess your AI systems’ performance, accuracy, and relevance, ensuring they continue to meet evolving business objectives.

3. Scalable Cloud Infrastructure

We optimise and manage your cloud resources to support growing AI workloads, ensuring flexibility and cost-efficiency.

4. Incident and Problem Management

We address and resolve incidents swiftly, identifying root causes to prevent recurring issues and ensuring smooth operations.

5. Cost Optimisation Advisory

We analyse your AI operations to identify cost-saving opportunities, helping you maximise your ROI while maintaining performance.

Don't stall at production

Stay ahead of the curve by learning the most common GenAI pitfalls – and how Managed AI helps you avoid them.

Benefits

Empowering businesses with AI

With Managed AI, focus on what matters most and let us manage your workloads to ensure tangible business outcomes.

Better ROI

Ensure long-term relevance and value by adapting to evolving data and AI trends.

Sustained efficiency

Continuous optimisation to keep your infrastructure lean and your operations effective.

Enhanced productivity

Free internal teams to focus on growth, not day-to-day operations.

Managed AI engagement

End-to-End Onboarding and Continuous Support

Our Managed AI service ensures a smooth transition from your current AI setup to a fully optimised, scalable AI system. We provide continuous support throughout the lifecycle, adapting to your evolving needs and helping you realise the full potential of your AI investments.

01. Onboarding and Transition

We work closely with you to integrate your AI systems into our managed services, ensuring a seamless transition.

- Evaluate and assess your existing AI infrastructure.

- Develop a tailored onboarding plan with clear milestones.

- Align goals and priorities and assess potential risks.

02. Full management and configuration of your AI setup

Once the transition plan is set, we take full responsibility for managing and optimising the new AI setup.

- We handle all necessary documentation and ensure your team is fully informed.

- We configure the monitoring, security, and performance tools for seamless operation.

- We enable Business Value Assurance to evaluate the business impact, ensuring that AI workloads consistently deliver the expected business outcomes.

03. Go-Live and stabilisation

After go-live, we offer real-time support to ensure systems perform as expected and resolve any initial challenges.

- Monitor AI workloads for stability and performance.

- Address any immediate issues and fine-tune configurations.

- Stabilise the system for long-term performance and scalability.

04. Continuous monitoring and optimisation

Our proactive approach ensures that your AI workloads continue to perform at their peak.

- Ongoing monitoring of data pipelines, AI applications, models, and cloud services.

- AI model fine-tuning and retraining to maintain model relevance.

- Regular performance, cost, and problem management reviews to ensure continuous improvements.

05. Quarterly reviews and ongoing support

We assess your AI systems quarterly, providing insights and recommendations for future optimisations.

- Review data quality, AI model performance, and cloud infrastructure alignment.

- Provide cost-saving opportunities through our Cost Optimisation Advisory.

- Adjust services based on feedback, ensuring your AI systems meet evolving business goals.

Why Firemind

Proven track record

Partnered with AWS, we have helped organisations achieve tangible business outcomes with generative AI.

Generative AI Tools Partner Finalist 2024 & Rising Star Partner of the Year 2023

We received the AWS UKI Rising Star of the Year 2023 award in recognition of our work at the forefront of generative AI on AWS.

2x Generative AI competencies

We were a launch partner for the AWS generative AI competencies and among the first in the world to achieve both.

Case study

95% faster document review process

An automated solution that pulls the desired data from multiple documents and presents it in a simple-to-view UI and as CSV.

“The solution is significantly outperforming OpenAI, and provides much better results.”

Dr. Malte Polley

AWS Cloud Solution Architect at MRH Trowe